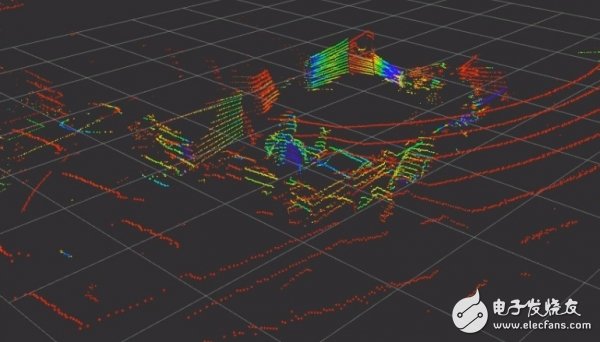

An Uber self-driving car was recently involved in an accident with a cyclist. Many people believe that Uber should bear responsibility for its system's poor capability, while others think the accident is not worth making a fuss over. In my opinion, such accidents can indeed be avoided by technical means. Why, then, is this problem harder to solve for an automated driving system?

So first, let's answer the question: "Why doesn't Uber use the kind of night vision system that Mercedes-Benz fits to its cars?"

First of all, I need to emphasize that I do not know the specific cause of the accident, and I will not claim that it was unavoidable. I am also not going to blame anyone in this article, or try to prove any particular account of the cause. I am simply discussing why this problem is harder to solve for an artificial intelligence system than for conventional driving technology.

Almost every new vehicle has a conventional collision avoidance (CA) system. Such a system has a single goal: to brake when the vehicle is about to collide. It is deterministic, meaning that when it detects a particular signal it takes the corresponding action (i.e., braking), and the same signal always produces the same response. Some collision avoidance systems do make probability-based judgments about the environment, but in general they are very simple: they trigger the brakes when the vehicle approaches something at an unreasonable speed. You can do this with a simple IF statement in your program code.

So why can't artificial intelligence systems do this?

Artificial intelligence is a system that demonstrates cognitive skills such as learning and problem solving. In other words, it does not rely on pre-programmed rules that monitor known sensor inputs and take pre-defined actions.
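
As a rough illustration of the "simple IF statement" idea, a deterministic braking rule might look like the sketch below. The function name, the use of time-to-collision as the trigger, and the 2-second threshold are all assumptions made for this example; they are not details of any real vehicle's system.

```python
def should_brake(distance_m: float, closing_speed_mps: float,
                 ttc_threshold_s: float = 2.0) -> bool:
    """Deterministic rule: brake when time-to-collision is below a threshold.

    The 2.0 s default is an illustrative assumption, not a real-world value.
    """
    if closing_speed_mps <= 0:
        # The gap to the obstacle is not shrinking, so there is nothing to do.
        return False
    time_to_collision_s = distance_m / closing_speed_mps
    return time_to_collision_s < ttc_threshold_s
```

The same inputs always give the same answer: `should_brake(10.0, 10.0)` returns `True` every time it is called. An AI-based system contains no hand-written rule of this kind.
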
Unlike the earlier approach of pre-defining responses to known situations, we now have to feed the algorithm a large amount of training data and let it learn how to operate. This is the basic principle of machine learning. If we used machine learning to build a collision avoidance system, it could achieve near-perfect results, but it would still be a single-purpose system. It could brake, but it would not learn to navigate.

Navigation consists of sensing and interpreting the environment, making decisions, and taking action. Environmental awareness includes path planning (where to go), obstacle detection, and trajectory estimation (how detected objects move), and much more besides. Collision avoidance is therefore just one of many tasks the system must accomplish. It faces many questions at once: where am I going, what do I see, how should I interpret the scene, are objects moving, how fast are they moving, will my trajectory cross someone else's, and so on.

This autonomous navigation problem is too complex to solve with IF-ELSE statements that read sensor signals. Why? Because to collect all the data needed for the job, the vehicle must carry dozens of different sensors. The goal is to build a comprehensive picture while compensating for the weaknesses of each individual sensor. Estimate the number of combinations of measurements these sensors can produce, and you can see how complex an automated driving system is. Enumerating every possible input combination is far beyond what a human mind can manage.

In addition, such a self-learning system is likely to be guided by probability. If it notices something on the road, it considers all the potential options and attaches a probability to each.
For example, if the probability of an object being a dog is 5% and the probability of it being a truck is 95.7%, then it will judge the object to be a truck.

But what if the sensor inputs contradict each other? This is quite common. For example, an ordinary camera captures nearby objects clearly, but only in two dimensions. Lidar, a type of laser-based sensor, sees the same object in three dimensions, but its observations lack detail, especially color information (see the figure below). We can therefore use multiple cameras shooting from several angles to reconstruct a 3D scene and compare it with the lidar "image". The combined result is clearly more reliable. However, cameras are very sensitive to lighting conditions: even a little shadow can interfere with parts of the scene and degrade the output. A good recognition system should be able to rely more on the lidar input in that case, and more on the camera in others. Where the two types of sensor agree, the conclusion is the most credible.

Photo: I am sitting in an office chair (in the center of the image) waving at the Velodyne VLP-16 lidar. The lidar used in self-driving cars has a higher resolution, but still cannot match a camera. Note that this image comes from a single lidar scan; combining multiple scans can further improve the resolution.

So what happens if the camera recognizes the target as a truck, the lidar thinks it is a dog, and both conclusions are equally credible? This is actually the most difficult situation, and on its own it cannot be resolved. Modern systems use a memory mechanism that includes maps and a record of what the vehicle has already seen, and they track the recorded information from one image to the next.
If two sets of sensors (more precisely, the two algorithms that interpret the sensor readings) have both considered an object to be a truck for the past two seconds, and one of them now considers it to be a dog, the target will still be treated as a truck until there is stronger evidence. Please keep this example in mind; we will come back to it when discussing the Uber accident.

Let's review.

So far we have seen that an AI system must process input from many different sensors, evaluate the quality of that input, and build an understanding of the context. Different sensors sometimes give different predictions, and not every sensor provides information at every moment. The system therefore builds a memory that influences its judgments, just as humans do. It then has to fuse all this information into a consistent assessment of the current situation and drive the car.

Sounds good, but can we trust such an AI system?

The quality of the system depends on its overall architecture (which sensors are used, how the sensor information is processed and fused, which algorithms are used, how decisions are evaluated, and so on) and on the nature and amount of the data used in training. Even with a perfect architecture, too little data can lead to serious mistakes. It is like assigning an inexperienced employee to a daunting task. The more data there is, the more opportunities the system has to learn and the better its decisions. Unlike a human, an AI system can pool driving experience accumulated over hundreds of years and ultimately drive better than any individual.

So why does such a system cause casualties?

Below we look at a number of possible situations in which a wrong assessment can lead to an accident.
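
Before turning to those situations, the memory mechanism summarized above (an object stays a "truck" until stronger evidence arrives) can be sketched as follows. This is a minimal illustration only: the class name `TrackedObject`, the confidence values, and the 0.2 margin are hypothetical choices, not how any production system is known to work.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TrackedObject:
    """Remembers the label assigned to one object across time steps."""
    label: Optional[str] = None
    confidence: float = 0.0

    def update(self, new_label: str, new_confidence: float,
               margin: float = 0.2) -> str:
        """Return the label to act on after a new observation.

        `margin` (an assumed value) is how much more confident a
        contradicting observation must be before memory is overridden.
        """
        if self.label is None or new_label == self.label:
            # First sighting, or the observation agrees with memory:
            # keep the label and the highest confidence seen so far.
            self.label = new_label
            self.confidence = max(self.confidence, new_confidence)
        elif new_confidence > self.confidence + margin:
            # Contradicting evidence is clearly stronger: switch labels.
            self.label = new_label
            self.confidence = new_confidence
        # Otherwise the contradicting evidence is too weak; keep the memory.
        return self.label
```

With these assumed numbers, an object seen as a "truck" with confidence 0.7 stays a truck when one observation says "dog" at 0.7, and only becomes a dog once an observation such as "dog" at 0.95 arrives. The price of this stability is delay: the system reacts late when the new observation was the correct one.
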
We will also explore the circumstances under which AI systems are more likely to misjudge.
· First, if the system has not seen enough similar data, it may not understand the current situation properly.
· Second, if the environment is hard to perceive and the sensor input is unreliable or the signals are mixed, the system may misjudge.
· Third, if the interpretation of the sensor input contradicts the interpretation held in system memory (for example, the object was identified as a truck in the previous time step, but a sensor now judges it to be a dog), the result may be a wrong judgment.
· Finally, we cannot rule out other causes of failure.

True, any well-designed system can handle each of these problems on its own. However:
· It takes time to resolve conflicts;
· A combination of several factors can lead to wrong decisions and actions.

Before we delve into the specific case, let us briefly look at what modern sensors can and cannot do. Many people say that today's technology is so advanced that Uber should have been able to clearly identify a passing pedestrian, including one who is crossing where they should not or who suddenly steps out of the dark into the illuminated area.

So what can the sensors measure, and which scenarios can they not handle? Here I am talking purely about measuring, not about the ability to understand what is measured.
· Cameras cannot see in the dark. A camera is a passive sensor that only records what is lit. I put this one first because there are already many powerful cameras (such as HDR cameras) that work well in dark environments. However, such equipment copes with low light, not with a complete absence of light.
For environments with no light at all, infrared and infrared-assisted cameras could solve the problem, but autonomous vehicles use lidar instead of such devices. Most cameras used in self-driving cars therefore still cannot "see" in the dark.
· Radar easily detects moving objects. It exploits the Doppler effect: a radio wave reflected from a moving target comes back with a shifted wavelength. Conventional radar, however, has difficulty measuring small, slow-moving, or stationary objects, because the waves reflected from a stationary object differ only slightly from those reflected from the ground.
· Lidar works like ordinary radar, except that it emits laser light, which lets it map any surface in three dimensions. To widen the three-dimensional imaging range, most lidars rotate continuously, scanning the surroundings much as a copier scans a sheet of paper. Lidar does not rely on external lighting and can accurately detect targets in the dark. However, although high-end lidars have excellent resolution, they need powerful computers to reconstruct the 3D image. So if a vendor claims that its lidar operates at 10 Hz (that is, 10 scans per second), remember to ask whether the data can also be processed at 10 Hz. Although lidar is used in almost every autonomous driving system on the market, Elon Musk believes it is only of short-term significance, so Tesla does not use it.
· Infrared sensors distinguish objects by temperature, so they are obviously very sensitive to temperature. When the sun is shining they may be unable to tell different objects apart, which is why infrared plays only a limited role in autonomous driving systems.
· Ultrasonic sensors are ideal for collision avoidance at low speeds.
Most parking sensors use ultrasonic technology, but their working range is very short, so when they are used for collision avoidance the target is often detected too late. For this reason, Tesla and other manufacturers that want to bring their solutions to highway driving prefer radar.

1. Let's first take a look at this photo. What do you notice in it?

Photo: In a city of towering skyscrapers, people are crossing at a crosswalk.

Did you notice the person on the bicycle? He obviously does not intend to cut in front of the car; he has stopped to wait for the car to pass before moving on.

Figure: A detail from the picture above, showing the person on the bicycle.

So the first possible explanation is that the algorithm has seen many cyclists who wait for the car to pass before moving on. Obviously, an AI system that braked every time it encountered a cyclist would be of very questionable quality. But what about an unusual cyclist who cuts across, unlike most riders, who wait for the car to pass? This case is very important, yet there is not enough data to teach the AI system about it. Although Waymo (also known as the Google car) announced two years ago that it can accurately identify cyclists and even their hand gestures, detecting cyclists and predicting their behavior remains an open problem.

2. The understanding is missing. Take a look at the photo below:

Assuming that our system can distinguish between cyclists who yield and cyclists who do not, the rider in the Uber accident was undoubtedly in the blind spot between the two. This means that only the lidar could have detected the rider in time to stop. Perhaps the lidar did detect the rider correctly at the time, but it is much harder to recognize a rider in a 3D point cloud than in a camera image.
Did the system recognize it as a rider, or as some other object moving toward the car? Perhaps not, and so the system decided to keep driving, treating it like a normal object in another lane. Similarly, if I were the driver and not sure what the object in the picture was, I might choose to keep going. Of course, if the object kept moving toward me, an accident would be likely. But experience tells us that in 99.99% of cases this does not happen.

What happened after the lighting?

So far we can give fairly reasonable explanations of why the system was not fully prepared for collision avoidance. But why did the system not brake after the rider entered the lit area? It is hard to find a simple answer. In this situation a deterministic system would brake (although it might not avoid the collision completely). If I were testing a vehicle on regular streets, I would probably add a deterministic system as a backup. But for now, let's focus on the behavior of the AI system.

As I mentioned before, modern autonomous driving systems have memory, and they trust each sensor to a different degree depending on the environment. Darkness is a very challenging condition, because without illumination the camera cannot deliver results in real time with enough confidence. An AI system is therefore likely to lean more heavily on lidar at night. As mentioned earlier, the lidar delivers 10 scans per second, but the processing rate depends on the specific system and generally does not keep up with the scan rate. Even a fairly powerful laptop may process only about one scan per second (which includes converting the raw input into a three-dimensional image, finding objects in that image, and working out what they are).
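
To get a feel for why the processing rate matters, the small calculation below compares how far a car travels between two processed lidar scans at 10 Hz and at 1 Hz. The 60 km/h speed is an assumed value chosen purely for illustration; it is not taken from the accident, and the function name is hypothetical.

```python
def distance_between_updates_m(speed_kmh: float, update_rate_hz: float) -> float:
    """Distance (in meters) the car covers between two processed scans."""
    speed_mps = speed_kmh / 3.6  # convert km/h to m/s
    return speed_mps / update_rate_hz


ASSUMED_SPEED_KMH = 60.0  # illustrative assumption, not a measured value

for rate_hz in (10.0, 1.0):
    gap_m = distance_between_updates_m(ASSUMED_SPEED_KMH, rate_hz)
    print(f"{rate_hz:4.1f} Hz -> {gap_m:.1f} m between updates")
```

At the assumed speed, the car covers about 1.7 m between updates when scans are processed at 10 Hz, but about 16.7 m when they are processed only once per second. An object that moves into the car's path between two processed scans is effectively invisible to the lidar pipeline over that distance.
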
Dedicated hardware increases this speed, but it is still unlikely to reach 10 Hz, at least not without sacrificing resolution.

Now let's assume that the AI knows there is an object in the dark and is more inclined to trust the data provided by the lidar. When the rider moves in front of the car, the camera and other sensors register an object. A deterministic system would brake as soon as it interpreted that signal. To an AI system, however, the same signal could mean other things as well: a UFO? An atmospheric glow? Compare the deterministic system with what the autonomous AI has to weigh:
(1) On the one hand, the system has a sensor it considers trustworthy, the lidar, which tells it that there is nothing in front of the car (because of the slow processing speed, it has not yet picked up the rider's movement).
(2) The system can consult the sensors' measurement history, which contains nothing to suggest that an object might collide with the car.
(3) Finally, some sensors report an obstacle in front of the car.
(4) Perhaps the algorithm can classify the obstacle.

Now the decision is in the hands of the AI. We must bear in mind that an AI system works with probabilities. Every sensor has some error rate; after all, no measurement is 100% accurate. The predictions derived from the sensor data carry errors of their own.

Why is it wrong?

If the camera does not agree with the judgment given by the lidar (or the image has not yet been matched to the lidar data), the system cannot obtain accurate 3D data, so it can only reconstruct the scene from the camera's 2D image. Let's take a look at the image of the rider in the Uber accident:

Figure: A screenshot from the Arizona police video.
Lacking three-dimensional information, the system can rely only on the machine learning model to detect the target, and it does not know the distance between itself and the target.

Why couldn't the system recognize the rider? Here are several hypotheses:
· The person is wearing a black jacket (see the yellow area) that blends into the night. Many people think that modern cameras can easily distinguish a black jacket from a night background. That is true, but most machine learning algorithms cannot work in real time with models trained on images of 2 to 3 megapixels. To shorten processing time, most models use an image resolution of only 1000 x 1000 pixels or even lower. Although some models can handle images of about 2000 x 1000 pixels, they still cannot detect riders at night. This is because in most cases the model will still use a lower-resolution image or average the pixel values over a region to form superpixels. In practice, therefore, only some of the objects in such images can be identified accurately.
· Without proper 3D information (which provides distance data), the bike may be misclassified; see the green and pink markings in the image above. When we humans look at the whole image, we notice the person immediately. For the algorithm, however, the bicycle frame (marked with pink dots) looks very similar to car taillights (also marked in pink). Back to probability: when driving at night, which does the algorithm consider more likely to appear in front of the car, taillights or a bicycle frame? It has probably seen taillights of many different shapes, but it has rarely seen a bicycle frame crossing in front of the car. It therefore tends to judge the target to be a taillight.
· Finally, I marked the area under the streetlight with an orange dot; you can find the same color and shape elsewhere in the image.
Therefore, if the camera's error rate is too high, the autonomous driving system may not accept its input. Perhaps the system rates the pink area as 70% likely to be a bicycle and 77% likely to be a taillight; given the actual scene, such a guess is quite reasonable.

With that, I have basically made my point: building an AI system capable of driving autonomously is a very difficult and challenging task, especially given the complexity involved and the limited amount of training data available, which is itself highly error-prone. Some individual functions, on the other hand, are much easier to implement with deterministic algorithms. Equipping early self-driving cars with a deterministic backup system for collision avoidance should be a good idea. At the same time, it is quite certain that nobody can build a self-driving car using a deterministic approach alone.

Finally, I want to reiterate that I do not know the specific cause of the accident. The actual situation may be totally different from my guesses. But at least after reading this article, I hope you will no longer be fooled by claims on social media that the accident prevention technology of 1, 2, 5, or even 10 years ago is better than today's autonomous driving systems. I admit it is hard to accept, but the facts show that technology able to avoid such accidents does exist, and AI systems cannot yet make full use of it. Incidentally, since the accident, research projects have worked out more than 900 hazard scenarios that an AI system must be able to handle.

So, if I had programmed Uber's system, could I have saved the rider's life?

The answer is that nobody knows. The internals of a modern AI system are too complex for anyone to assess Uber's data processing from the outside.
In fact, whether the AI system is built by me, you, Waymo, or Tesla, it will judge based on probability; otherwise it is not an AI system. And every intelligent system will inevitably make mistakes and learn from them.

Finally, be sure to include a collision avoidance system as a backup in autonomous vehicles! Today's intelligent solutions are far from perfect, so don't be too hasty!

SUNLUX IOT Technology (Guangdong) INC., http://www.sunluxbarcodereader.com