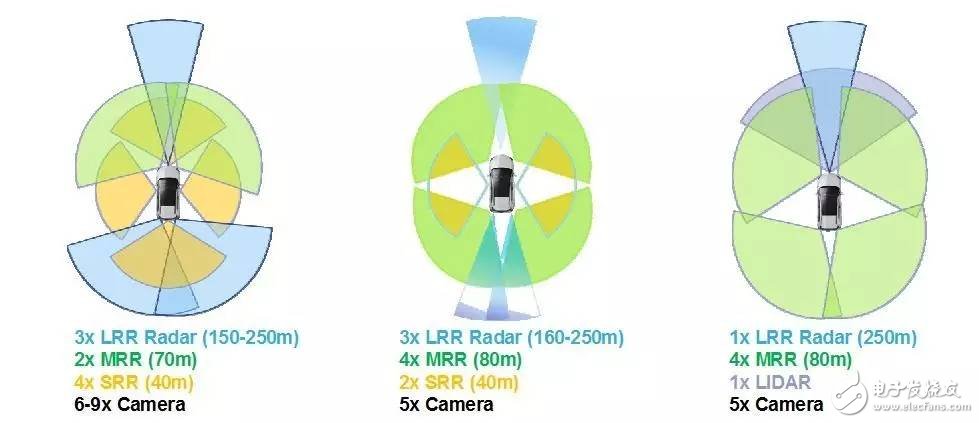

Autonomous driving may be the brightest jewel in the high-tech crown over the next 20 years, so anyone with some standing and ambition in the tech world wants a foot in the door. Imagine letting users take their hands off the steering wheel, leave the "chore" of driving behind, and simply enjoy the "pleasure" of the ride. It is a very compelling picture, but not an easy one to "paint". An important prerequisite is a sensor technology that can replace the human eye and handle object detection, classification, traffic signal recognition, and distance and speed estimation while driving. Only with such trustworthy "eyes" will users feel confident enough to hand over control of the car.

At present, three sensing technologies are expected to replace the driver's eyes: camera, radar (RADAR), and lidar (LiDAR).

Figure 1. Comparison of the three sensing technologies for autonomous driving: camera, radar, and LiDAR

The optical camera is the technology closest to the imaging mechanism of the human eye, and the only one of the three that can capture detailed image information such as object color, contrast, and material, so it has a clear advantage in classification and recognition. Like the human eye, however, the camera is easily affected by the external environment: weather conditions, low ambient light, and similar factors can be fatal to its performance. Optical sensing is also inherently poor at detecting the relative motion of two moving objects, so it is weak at speed detection. Still, the camera has one of the biggest competitive advantages: low cost. Ever-cheaper image sensors and mature embedded image processing solutions have greatly lowered the barrier to adoption, so many manufacturers use the optical camera as their stepping stone into ADAS and autonomous driving. Camera shipments are expected to reach 400 million units by 2030, an order of magnitude ahead of the other two technologies.

The second candidate, radar, works by emitting electromagnetic waves into space and detecting the state of surrounding objects from the reflected echoes. Radar has a fairly long history in automobiles. Today's vehicle radar comes in three types: short-range radar (SRR, 0.2~30 meters), medium-range radar (MRR, 30~80 meters), and long-range radar (LRR, 80~200 meters). The first two are familiar from parking assistance and blind spot detection, while in recent years LRR has begun to prove itself in ADAS, in systems such as adaptive cruise control (ACC), automatic emergency braking (AEB), and collision warning (FCW and BCW). In practice, however, radar still has accuracy problems in some special driving situations, such as detecting a vehicle that suddenly cuts in from an adjacent lane, or misjudging the distance to the vehicle ahead in the same lane. This limits its room for application in autonomous driving.

LiDAR, like radar, is an active detection technique. The difference is that what it emits and receives back from surrounding objects is laser light rather than radio waves, which gives it a clear advantage in detection accuracy and speed. LiDAR's use in cars first attracted worldwide attention at the 2007 DARPA (United States Defense Advanced Research Projects Agency) autonomous driving challenge. Because it can detect objects all around the vehicle within a 360° 3D field of view, LiDAR has become standard equipment in autonomous driving research. Looking at the LiDAR units on the roofs of Google's and Baidu's self-driving prototypes, there is always a sense of "I don't quite understand it, but it looks impressive".
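
Because radar and LiDAR are both active techniques that listen for a reflected echo, the basic ranging idea can be illustrated with a short sketch. This is a simplified pulse time-of-flight model, not a description of any particular product (production automotive radars typically use more elaborate modulation schemes). The function names are hypothetical, and assigning the boundary values of 30 m and 80 m to the shorter-range band is an assumption, since the quoted ranges share their endpoints.

```python
from typing import Optional

# Speed of light in vacuum, in metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_S = 299_792_458.0

# Range bands quoted in the article, in metres: (label, minimum, maximum).
RADAR_BANDS = [
    ("SRR", 0.2, 30.0),
    ("MRR", 30.0, 80.0),
    ("LRR", 80.0, 200.0),
]


def range_from_round_trip(round_trip_s: float) -> float:
    """Distance to a target, given the round-trip time of its echo in seconds."""
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The signal travels to the target and back, so halve the path length.
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def radar_band(distance_m: float) -> Optional[str]:
    """Return the first band whose range covers distance_m, or None."""
    for label, low, high in RADAR_BANDS:
        # Bands are checked in order, so a shared boundary such as 30 m
        # is reported as the shorter-range band (an assumption).
        if low <= distance_m <= high:
            return label
    return None
```

For example, an echo that returns after one microsecond corresponds to a target roughly 150 meters away, which falls in the LRR band.
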
As the newcomer among in-vehicle sensing technologies, LiDAR's biggest problem is that it is simply too expensive: some systems cost more than the car itself, which is hard to accept. The current trend is therefore to replace scanning LiDAR, which relies on a mechanical rotating assembly, with solid-state LiDAR (SSL). Although SSL has a limited field of view (FOV), it is cheap and reliable, and better matches the character of consumer-grade automotive applications.

Figure 2. Three-sensor combinations for ADAS and autonomous driving

It is easy to see that, measured against the ideal of autonomous driving, none of the three sensing technologies available to us is perfect. A feasible solution is therefore to integrate all three and draw on the strengths of each. This has driven the development of "sensor fusion" technology, which processes the data collected by different sensors together in order to identify and judge an object's features, spatial position, and motion state more accurately.

For autonomous driving, though, merely "seeing" the surrounding environment does not seem to be enough. Developers are also using V2X technology to give cars an "over-the-horizon" capability. V2X lets a car establish fast, reliable wireless communication with other cars and with nearby transportation infrastructure, so that they can exchange information and then make judgments and decisions. This effectively overcomes the "visual" blind spots caused by obstacles, and also provides a wider range of traffic information through other connected cars and infrastructure. The car is then no longer an isolated individual but a node in the whole intelligent transportation system. At that point, what helps users watch the road will be the entire intelligent transportation CPS (cyber-physical system). If this comes true, the driver's eyes really can retire.

Figure 3. Avnet's ADAS solution based on NXP i.MX6D

Avnet's ADAS solution based on NXP i.MX6D supports blind spot monitoring (BSM), forward collision warning (FCW), lane departure warning (LDW), night vision system (NVS), parking assist (PA), pedestrian detection system (PDS), road signal recognition (RSR), and panoramic camera (SVC), a solid step toward fully autonomous driving.
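
The sensor fusion idea described earlier, in which data from several sensors is combined to estimate an object's position more accurately, can be illustrated with a minimal sketch. It uses inverse-variance weighting of independent distance estimates, a standard textbook technique chosen here for illustration; the article does not say which fusion method any real system uses. The `RangeReading` type, the `fuse` function, and the noise figures are all hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class RangeReading:
    sensor: str          # e.g. "camera", "radar", "lidar"
    distance_m: float    # estimated distance to the object, in metres
    variance_m2: float   # assumed noise variance of that estimate (hypothetical)


def fuse(readings: List[RangeReading]) -> Tuple[float, float]:
    """Inverse-variance weighted mean of independent distance estimates.

    Returns (fused distance, fused variance). More precise sensors
    (smaller variance) receive proportionally more weight.
    """
    if not readings:
        raise ValueError("need at least one reading")
    weights = []
    for reading in readings:
        if reading.variance_m2 <= 0:
            raise ValueError("variance must be positive")
        weights.append(1.0 / reading.variance_m2)
    total_weight = sum(weights)
    fused_distance = sum(
        w * r.distance_m for w, r in zip(weights, readings)
    ) / total_weight
    # The fused variance is never larger than the best single sensor's.
    return fused_distance, 1.0 / total_weight


# Illustrative readings with made-up noise levels.
example_readings = [
    RangeReading("camera", 53.0, 4.0),
    RangeReading("radar", 50.0, 1.0),
    RangeReading("lidar", 50.4, 0.25),
]
fused_distance_m, fused_variance_m2 = fuse(example_readings)
```

With these made-up numbers the fused estimate lies closest to the LiDAR reading, because it was assigned the smallest variance, and the fused variance is smaller than that of any single sensor. This is the sense in which combining imperfect sensors can outperform each one alone.
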